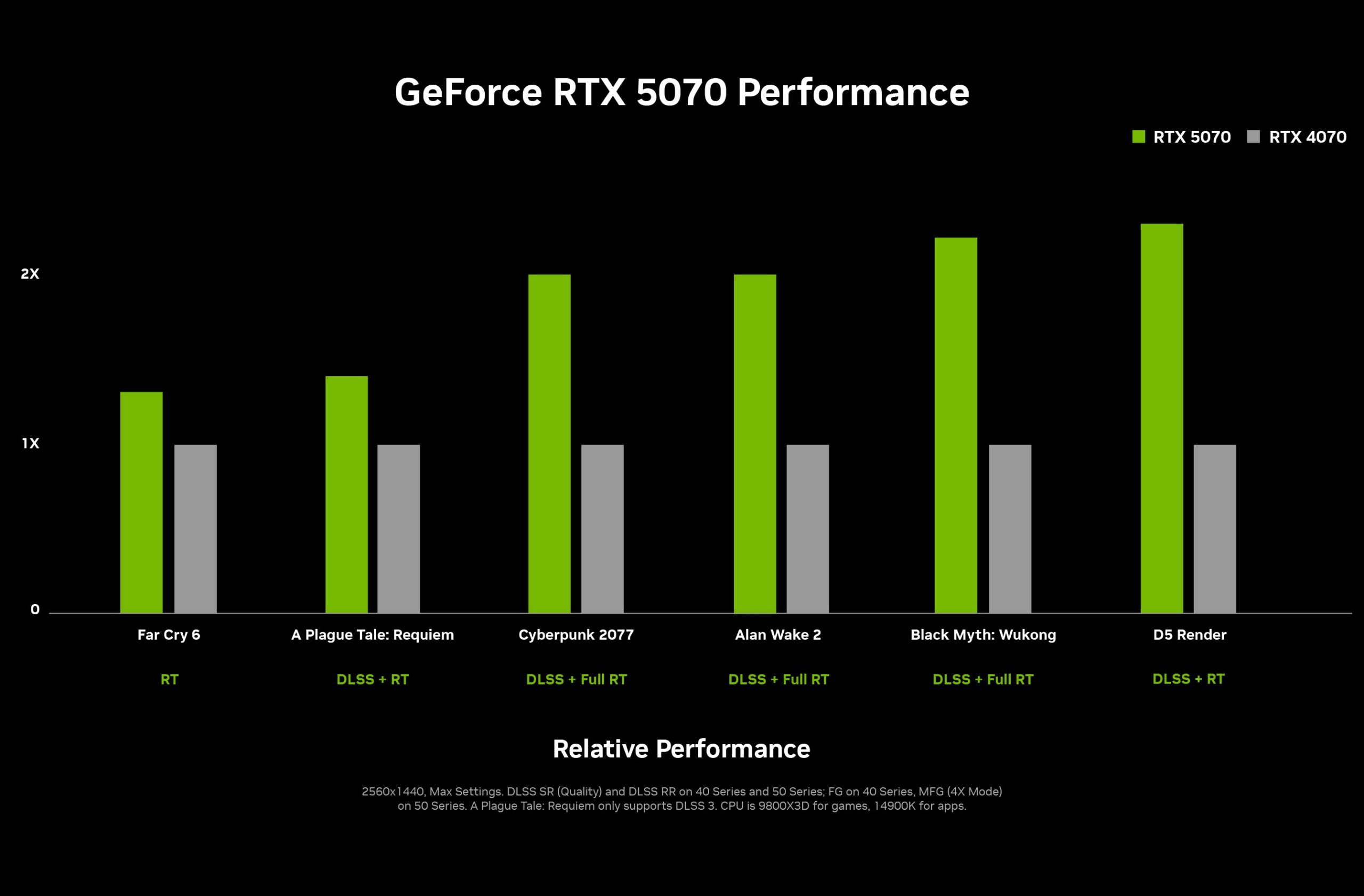

*With heavy DLSS and frame generation. Total bullshit and false marketing by Nvidia IMO.

PC Gamer left out the charts that really show it; everything to the right of A Plague Tale: Requiem has extra generated frames on the 5070:

This is compared to a 4070 though.

The point is that the gains come mostly from the new frame generation. The difference between a 4070 and a 4090 is much larger than the gains they’re showing without the new FG.

Nvidia is lying their asses off.

DLSS without frame generation is at least equivalent (sometimes superior) to a native image though. If you’ve only ever seen FSR or PSSR with your own eyes, you might underestimate just how good DLSS looks in comparison. [XeSS is a close second in my opinion - a bit softer - and depending on the game’s art style, it can look rather pleasing, but the problem is that game developers implement it relatively rarely. It also comes with a small performance overhead on non-Intel cards.]

Frame generation itself has issues though, namely latency, image stability and ghosting. At least the latter two are being addressed with DLSS 4, although it remains to be seen how well this will work in practice. They also claim, almost as a footnote, that frame rates are up to eight times higher than before. Half of that comes from upscaling and half from three generated frames per real frame, up from one inserted frame on the previous generation, which might indicate that the raw processing power of the 5070 is half that of the 4090. At the same time latency is supposedly “halved”, so maybe they are incorporating user input during synthetic frames to some degree, which would be an interesting advance. I’m speculating though, based on the fluff from their press release:

https://www.nvidia.com/en-eu/geforce/news/dlss4-multi-frame-generation-ai-innovations/
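To make the arithmetic behind the "up to eight times" figure concrete, here is a rough sketch. The 2x upscaling gain is only my reading of "half of that through upscaling", not a per-game number from Nvidia, and the function names and the 30 fps starting point are made up for illustration.

```python
def total_multiplier(upscaling_gain: float, generated_per_rendered: int) -> float:
    """Displayed-frame multiplier: upscaling gain times (1 rendered + N generated)."""
    return upscaling_gain * (1 + generated_per_rendered)


def rendered_fps(native_fps: float, upscaling_gain: float) -> float:
    """Frames the GPU actually renders per second (the ones based on real game state)."""
    return native_fps * upscaling_gain


def displayed_fps(native_fps: float, upscaling_gain: float, generated_per_rendered: int) -> float:
    """Frames shown on screen per second, rendered plus generated."""
    return native_fps * total_multiplier(upscaling_gain, generated_per_rendered)


# Hypothetical game running at 30 fps without any DLSS features.
native = 30.0
assumed_upscaling_gain = 2.0  # assumption, see the note above

new_fg = displayed_fps(native, assumed_upscaling_gain, 3)  # three generated frames per real one
old_fg = displayed_fps(native, assumed_upscaling_gain, 1)  # one generated frame per real one
real = rendered_fps(native, assumed_upscaling_gain)

print(f"multi frame generation: {new_fg:.0f} fps shown, {real:.0f} fps rendered")
print(f"previous generation FG: {old_fg:.0f} fps shown, {real:.0f} fps rendered")
```

Under these assumptions the number on the fps counter doubles between the two frame generation modes, while the count of actually rendered frames stays the same.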

Before anyone accuses me of being a shill for big N, I’m still on an old 2080 (which has DLSS upscaling and ray reconstruction, but not frame generation - you can combine this with AMD’s frame generation though, not that I’ve felt the need to do this so far) and will probably be using this card for a few more years, since it’s still performing very well at 1440p with the latest games. DLSS is one of the main reasons it’s holding up so well, more than six years after its introduction.

> DLSS without frame generation is at least equivalent (sometimes superior) to a native image though.

It really isn’t though. DLSS produces artifacts, especially for quick camera movements as well as things like hair and vegetation. Those artifacts get heavier the smaller the native rendering resolution is. It also differs quite a lot between implementations. In some games it looks better, in others worse (e.g. Dragon’s Dogma 2 and Stalker 2).

But my point wasn’t to bash DLSS anyway. It’s a good technology, especially for lower-powered devices. I use it in many games on my 2070s. But Nvidia using this technology to claim “4090 performance” on a card that really has far less power than a 4090 is dishonest and misleading. To make an honest comparison, you’d use the same settings and parameters on both cards.

DLSS completely hid a small thing I needed to find in a puzzle game. There are game design drawbacks to having the GPU overwrite a bunch of the graphics. A spiffed-up image that looks like the game you are playing isn’t necessarily preserving details you need.

Huh, I’ve never heard of that before. Do you have screenshots? Which game at which resolution and DLSS setting?

It was the circular oasis level of The Talos Principle 2 on a WQUXGA display. I didn’t capture screenshots. https://eip.gg/guides/the-talos-principle-2-south-3-star-statue-puzzles/ If you look at images 9 and 14, the little prism that the red beam connects to was completely erased by DLSS. I had to just scan around until the icon for establishing a connection popped up.

Interesting, thank you! Which DLSS setting were you using?

It’s been a while, so I’m not sure I remember, but probably Balanced with DLSS on. That game doesn’t expose many DLSS-specific settings in the UI.
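For a sense of scale, here is a small sketch of what Balanced would mean on a WQUXGA (3840x2400) panel. The per-axis scale factors are the commonly cited DLSS preset defaults. Whether this particular game uses them unchanged is an assumption, and the function names are made up.

```python
# Commonly cited per-axis render scales for the DLSS presets.
# Assumption: a given game may override these or use dynamic resolution.
DLSS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def internal_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Resolution the game renders at before DLSS upscales to the output size."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


def pixel_fraction(mode: str) -> float:
    """Share of the output pixels that are actually rendered."""
    return DLSS_SCALE[mode] ** 2


w, h = internal_resolution(3840, 2400, "balanced")
print(f"Balanced on WQUXGA renders at about {w}x{h}")
print(f"That is roughly {pixel_fraction('balanced'):.0%} of the output pixels")
```

With only about a third of the pixels rendered, a detail that is a pixel or two wide at native resolution can end up smaller than one rendered pixel, which would at least be consistent with the prism disappearing. That is a guess, not something verified for this game.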

Yes, DLSS/FSR/XeSS are great, but they exist because of a lack of performance, as a substitute for lowering the resolution.

I would never use it if I didn’t have to.

I’ve only ever noticed slight shimmering on hair, but not movement artifacts. Maybe it’s less noticeable on high refresh rate monitors - or perhaps I’m blind to them, kind of like how, a few decades ago, I did not notice frame rates being in the single digits…

This hair shimmering is an issue even at native resolution though, simply due to the subpixel detail common in AAA titles now. The developers of Dragon Age The Veilguard solved the problem by using several rendering passes just for the hair:

> This technique involves splitting the hair into two distinct passes, first opaque, and then transparent. To split the hair up, we added an alpha cutoff to the render pass that composites the hair with the world and first renders the hair that is above the cutoff (>=1, opaque), and subsequently the hair that is lower than the cutoff (transparent).
>
> Before these split passes are rendered, we render the depth of the transparent part of the hair. Mostly this is just the ends of the hair strands. This texture will be used as a spatial barrier between transparent pixels that are “under” and “on top” of the strand hair.

Source:

https://www.ea.com/technology/news/strand-hair-dragon-age-the-veilguard
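A toy sketch of the alpha-cutoff split described in the quote, reduced to a single pixel on the CPU. This is not EA's shader code: the `HairFragment` type, the function names and the "smaller depth means closer to the camera" convention are all my own assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class HairFragment:
    strand_id: int
    alpha: float  # 1.0 = fully opaque
    depth: float  # assumption: smaller value = closer to the camera


def split_by_alpha_cutoff(fragments, cutoff=1.0):
    """Split fragments into the opaque pass (alpha >= cutoff) and the transparent pass."""
    opaque = [f for f in fragments if f.alpha >= cutoff]
    transparent = [f for f in fragments if f.alpha < cutoff]
    return opaque, transparent


def transparent_depth(fragments, cutoff=1.0):
    """Nearest depth of the transparent hair in this pixel, or None if there is none.

    Stands in for the depth texture that is rendered before the split passes.
    """
    depths = [f.depth for f in fragments if f.alpha < cutoff]
    return min(depths) if depths else None


# Hypothetical fragments covering one pixel: two solid strands and two faded strand ends.
pixel = [
    HairFragment(strand_id=0, alpha=1.0, depth=0.40),
    HairFragment(strand_id=1, alpha=0.3, depth=0.35),
    HairFragment(strand_id=2, alpha=1.0, depth=0.50),
    HairFragment(strand_id=3, alpha=0.6, depth=0.45),
]

barrier = transparent_depth(pixel)          # computed first, as in the quote
opaque, transparent = split_by_alpha_cutoff(pixel)

print("depth barrier:", barrier)
print("opaque pass:", [f.strand_id for f in opaque])
print("transparent pass:", [f.strand_id for f in transparent])
```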

Which is sad, because the 3070 and 2070 absolutely traded blows with the previous flagships.

The “4070” might’ve also had a chance if they hadn’t fk’ed around with the naming on most cards below the 4080.

Removed by mod

I’m assuming you mean that you disable DLSS frame generation, since DLSS upscaling and ray reconstruction result in an improved “real” frame rate, which means that the actual input latency is lowered, despite about 5% added input lag per frame. Since you’re almost always guaranteed to gain far more than 5% in performance with DLSS, these benefits more than offset the overhead.
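A back-of-the-envelope version of that trade-off, as a sketch. It treats input latency as simply proportional to frame time, which is a simplification. The 5% figure is the one from above, and the 60 fps baseline and 1.4x gain are made-up example values.

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on one rendered frame, in milliseconds."""
    return 1000.0 / fps


def latency_with_dlss_ms(native_fps: float, fps_gain: float, overhead: float = 0.05) -> float:
    """Per-frame latency with DLSS upscaling: shorter frame time, plus the added lag.

    Simplification: latency is modelled as frame time times (1 + overhead).
    """
    return frame_time_ms(native_fps * fps_gain) * (1 + overhead)


native_fps = 60.0  # hypothetical baseline without DLSS
fps_gain = 1.4     # hypothetical 40% performance gain from upscaling

before = frame_time_ms(native_fps)
after = latency_with_dlss_ms(native_fps, fps_gain)

print(f"native: {before:.1f} ms per frame")
print(f"DLSS:   {after:.1f} ms per frame including the 5% overhead")
```

In this model the break-even point is a gain of exactly 1.05x. Anything above that comes out ahead on latency.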