Fully validating hardware is an insane task that hasn’t really been done in years. It would mean 5 years between chip releases and a 2-5x increase in production cost, and people wouldn’t follow the validated configs anyway. If we stuck to the validated hardware spec, we would have 50-minute boot times and never go past a 3.5 GHz clock.

People already have the choice of whether to run on validated hardware. You can opt for a 2.8 GHz part that supports 2666 MT/s and is mostly tested and validated, or you can get a 5 GHz part that supports 6000 MT/s and is only partially validated. They cost the same. What do folks think people pick?

Could someone please explain this to a non-tech expert?

This is about Spectre, not about buggy hardware implementations.

Spectre is a fundamental flaw in how CPUs do speculative execution: it can leak information, so it’s a security vulnerability. Apparently Intel has been imposing draconian requirements on software to work around the issue rather than fixing it in hardware. A hardware fix is obviously what they should do, but it’s not at all trivial.

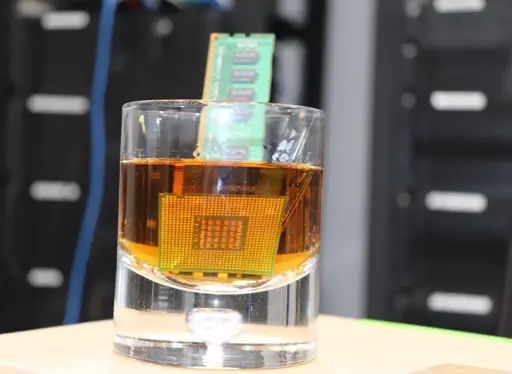

hardware is like your computer, stuff like the cpu and ram. software is like the programs on that computer. linus torvalds makes a program that has to deal with the details of the computer (the linux kernel). as such, they have to work around problems in the hardware.

Thanks for the unappreciated ELI2

fuck

I’m a graybeard software engineer with 30+ years of experience.

I appreciated your explanation.

Me too; it’s BECAUSE I’m so old that I appreciate a general grounding in what this is all about.

In the last 10 years there has been a seemingly noteworthy uptick in hardware bugs in both Intel and AMD CPUs. Security researchers find and figure out potential attack vectors that rely on these bugs (e.g. Spectre/Meltdown). Then operating systems have to put workarounds in their kernel code to ensure that these hypothetical attack vectors are accounted for, at the cost of performance and more complicated code.
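
For anyone curious what "workarounds in kernel code" can look like, here is a minimal sketch in C of one well-known pattern for Spectre variant 1 (bounds check bypass): clamp the index with a branchless mask after the bounds check, so that even a mispredicted branch can't speculatively read out of bounds. It's loosely modeled on the idea behind the Linux kernel's `array_index_nospec()`. The names `index_mask` and `read_clamped` are made up for illustration, the sketch assumes both the index and the size fit in the lower half of `size_t`, and it leaves out the compiler barriers real kernel code needs, so treat it as an illustration rather than a usable mitigation.

```c
#include <limits.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper (not a real kernel API): returns all-ones when
 * index < size and zero otherwise, without using a conditional branch.
 * Assumes index and size are both <= SIZE_MAX / 2. */
static inline size_t index_mask(size_t index, size_t size)
{
    /* The top bit of (size - 1 - index) is set exactly when index >= size,
     * because the subtraction wraps around. OR-ing in index is harmless
     * under the stated assumption (its top bit is zero). */
    size_t out_of_bounds =
        (index | (size - 1 - index)) >> (sizeof(size_t) * CHAR_BIT - 1);

    /* out_of_bounds is 0 or 1; subtracting 1 gives all-ones or 0. */
    return out_of_bounds - 1;
}

/* Hypothetical lookup: the normal bounds check stays, and the mask forces
 * the index to 0 on any path (including a speculative one) where the
 * check should have failed. */
uint8_t read_clamped(const uint8_t *table, size_t size, size_t index)
{
    if (index >= size)
        return 0;

    index &= index_mask(index, size);
    return table[index];
}
```

Multiply that extra masking by every array access that takes an attacker-influenced index and you get a feel for the "cost of performance and more complicated code" part.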

Linus is saying how annoyed he is with all this extra work they have to do, resulting in worse performance, all to plug vulnerabilities that we’ve never actually seen any real attackers use. He’s saying instead we should just write the code how it should be, and if the hardware is insecure, let it be the hardware company’s problem when customers don’t use the hardware.

The problem is, customers will continue to use the hardware and companies who need a secure OS (all of them) will opt to not use Linux if it doesn’t plug these holes.

Plus a lot of these bugs don’t get fixed, because they exist to allow the processors to “look ahead” for improved performance, at least on unmitigated benchmark tests.

> we should just write the code how it should be

Notably, that’s not what he says. He didn’t say it as a general rule. He said “for once, [after this already long discussion], let’s push back here”. (Literally “this time we push back”)

> who need a secure OS (all of them) will opt to not use Linux if it doesn’t plug these holes

I’m not so sure about that. He’s making a fair assessment. These are very intricate attack vectors. Security assessment is risk assessment either way. Whether you weigh a significant performance loss against low-risk, potentially high-impact attack vectors or assess the risk directly doesn’t make that much of a difference.

These attacks are so intricate and unlikely to occur, and with other firmware patches in line or alternative hardware available, there are alternative options and the risk is acceptable.

Hardware people sounds like a euphemism for protogen.

eww put an nsfw tag on that