Five of the world’s most popular language models from four of the world’s top AI companies each confabulate/hallucinate an incorrect answer to a question about a nonexistent “Marathon Crater”.

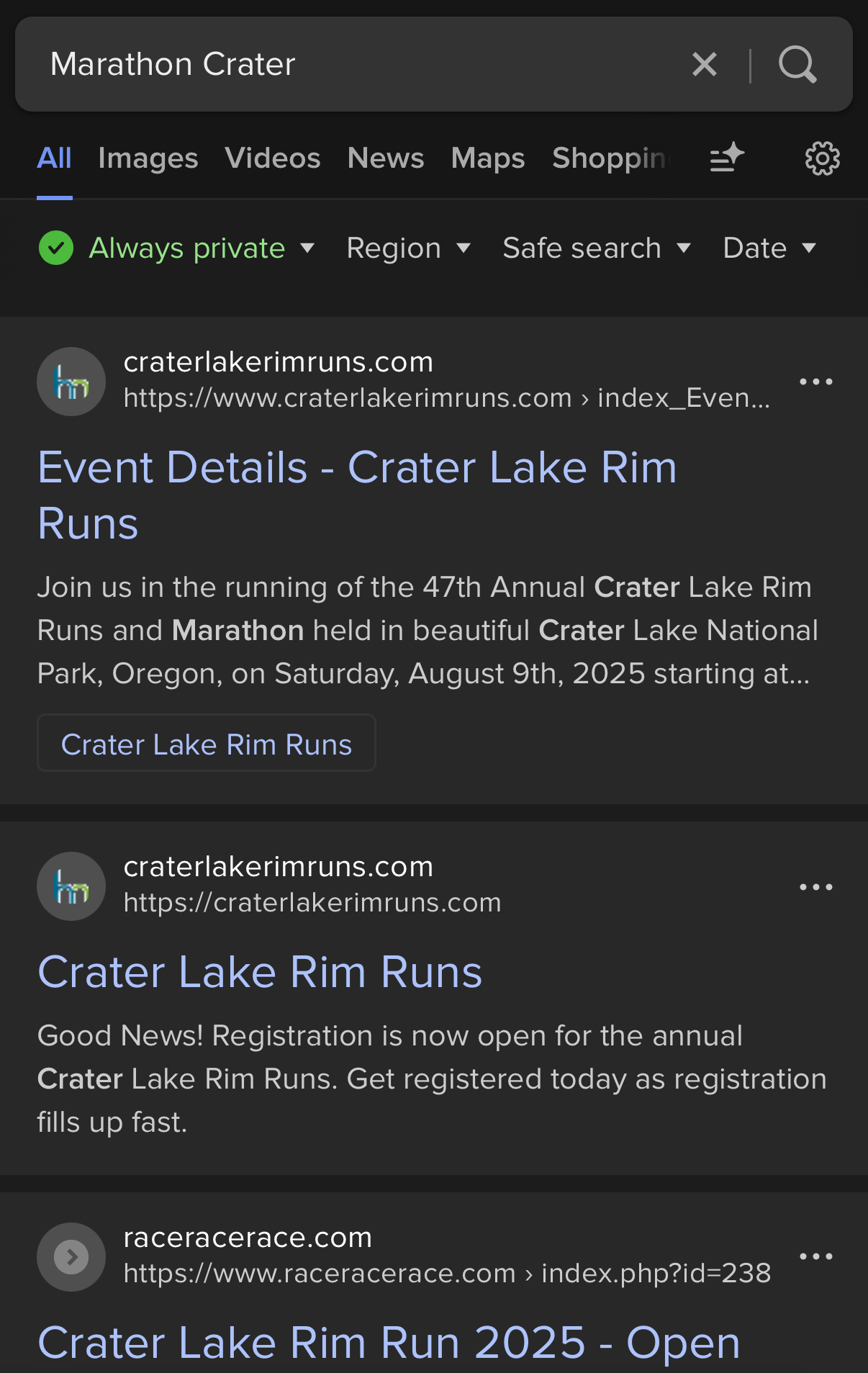

Web search screenshot attesting to its non-existence:

Q&A:

Q: If they all fix this by 100 years from now, th—-

A: then is it still “fun” to poke fun now? Yes.

[edit: typo]

How is it fixed? There is no “Marathon Crater” in Ontario, Canada. It just spewed out far more persuasive bullshit with even more confidence than the others.

The link should work without me (or you) being logged in. It’s a free-tier conversation. (I’m not going to pay for degenerative AI!)

…

Huh. OK, more fool me. I shared the wrong link. I’ll fix that. Try again and tell me if it works now.

Thank youuu, edited!

Ahahaha, that conversation! And it ends by telling you it could be a good idea to ask it for facts, ha!

It’s kinda garbage at conversing btw, I think because it’s too expensive for them, compared to re-prompting in a fresh window. But as we have seen very clearly… fresh window or not, buyer (or not) beware!

That is to say some will find value using the tool a certain way as long as they don’t think too hard about theft/climate/etc. :)

Yeah, I pushed it HARD on figuring out that it fucked up on the crater and it never caught on until I flatly told it.

It hallucinated a LOT of information about it too!